So far, this series on large language models (LLMs) has looked at how LLMs can simplify chatbot development and improve user experience, and how they can be used with knowledge bots. Today, I want to explore how LLMs can be used to validate a chatbot’s response before it is shown to the user.

This technique is especially useful for improving “knowledge” bots, because it helps ensure that users only see results that actually answer their questions.

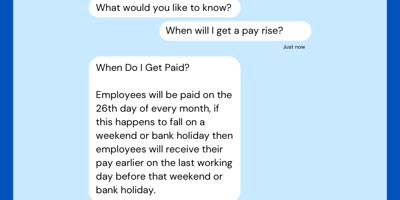

Take the example below, where an employee asks “when will I get a pay rise?”:

Not only has the bot failed to answer the question, it has made things worse by returning an irrelevant response simply because that response contains the word “pay”.

This does not look very smart. It would be better not to show an answer at all and to display a conciliatory message instead.

LLMs can really help in this situation. Various LLMs are available, including ChatGPT, Bard and Cohere.

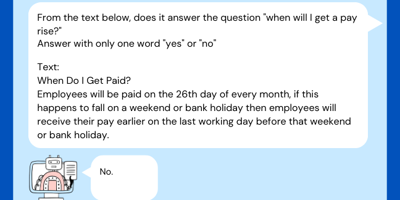

The solution is simple but very effective: using an API call, we pass the user’s question and the bot’s response to an LLM and ask it to confirm whether the response actually answers the question.

Note that we also use some “prompt engineering” to make sure the LLM returns only “yes” or “no”:

> From the text below, does it answer the question "when will I get a pay rise?"
>
> Answer with only one word "yes" or "no"
>
> Text:
>
> When Do I Get Paid?
>
> Employees will be paid on the 26th day of every month, if this happens to fall on a weekend or bank holiday then employees will receive their pay earlier on the last working day before that weekend or bank holiday.
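The check described above can be sketched in a few lines of Python. This is a minimal, provider-agnostic sketch rather than the article's actual implementation. It assumes a hypothetical `complete` callable that sends a prompt to whichever LLM you use and returns its text reply; `build_validation_prompt`, `parse_yes_no` and `is_answered` are illustrative names, not part of any library.

```python
import re
from typing import Callable

# Hypothetical LLM hook: takes a prompt string and returns the model's text
# reply. Wire this up to the LLM API of your choice (e.g. ChatGPT, Bard or Cohere).
CompleteFn = Callable[[str], str]


def build_validation_prompt(question: str, bot_response: str) -> str:
    """Build a yes/no prompt modelled on the example prompt in this article."""
    return (
        f'From the text below, does it answer the question "{question}"?\n'
        'Answer with only one word "yes" or "no".\n'
        "Text:\n"
        f"{bot_response.strip()}"
    )


def parse_yes_no(reply: str) -> bool:
    """Return True only if the reply's first word is "yes" (case-insensitive).

    Even when asked for one word, models sometimes add punctuation or extra
    words ("No.", "Yes, it does"), so only the first word is checked.
    Anything unexpected counts as "no", so the bot withholds the response.
    """
    match = re.match(r"\s*([A-Za-z]+)", reply)
    return bool(match) and match.group(1).lower() == "yes"


def is_answered(question: str, bot_response: str, complete: CompleteFn) -> bool:
    """Ask the LLM whether bot_response actually answers question."""
    return parse_yes_no(complete(build_validation_prompt(question, bot_response)))


if __name__ == "__main__":
    # Stand-in for a real LLM call, for illustration only: it always says "No."
    fake_llm = lambda prompt: "No."
    print(is_answered(
        "when will I get a pay rise?",
        "When Do I Get Paid? Employees will be paid on the 26th day of every month.",
        fake_llm,
    ))  # prints False
```

Treating any reply other than a clear "yes" as "no" is a deliberate fail-safe choice. If the model ignores the one-word instruction, the bot falls back to its conciliatory path instead of showing a possibly irrelevant answer.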

And here is the LLM’s response, confirming that the bot has not answered the question:

In this situation, the bot now has two options:

- Repeat – try to answer the question again from another knowledge source

- Output a conciliatory message to the user, accepting the question cannot be answered

Both of the above options are almost certainly better than showing an irrelevant response to the user.
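The two options can also be combined: the bot tries each knowledge source in turn (the "repeat" option) and shows a conciliatory message only when none of them produces a validated answer. The sketch below assumes hypothetical interfaces: each knowledge source is a callable that returns a candidate answer or `None`, and the validator is any `(question, answer) -> bool` check, such as an LLM yes/no prompt. The fallback wording is a placeholder.

```python
from typing import Callable, Iterable, Optional

# Hypothetical interfaces, for illustration only:
# - a knowledge source takes the question and returns a candidate answer,
#   or None if it has nothing relevant;
# - a validator returns True if the candidate actually answers the question
#   (for example, an LLM yes/no check).
KnowledgeSource = Callable[[str], Optional[str]]
Validator = Callable[[str, str], bool]

# Placeholder wording; adjust to suit the bot's tone.
FALLBACK_MESSAGE = "Sorry, I couldn't find an answer to that question."


def answer_with_validation(
    question: str,
    sources: Iterable[KnowledgeSource],
    is_answered: Validator,
    fallback: str = FALLBACK_MESSAGE,
) -> str:
    """Return the first validated answer, or a conciliatory fallback message."""
    for source in sources:
        candidate = source(question)
        # "Repeat": skip empty or unvalidated answers and try the next source.
        if candidate and is_answered(question, candidate):
            return candidate
    # No source passed validation, so apologise rather than show an
    # irrelevant response.
    return fallback


if __name__ == "__main__":
    # Stub sources and validator: the validator rejects everything, mimicking
    # the LLM answering "no" for the pay-rise question.
    faq = lambda q: "When Do I Get Paid? Employees will be paid on the 26th day of every month."
    policies = lambda q: None
    reject_all = lambda q, a: False
    print(answer_with_validation("when will I get a pay rise?", [faq, policies], reject_all))
```

The loop stops at the first answer that passes validation, so the validator (and any LLM call behind it) runs only as many times as needed.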

This is the third and final article in our three-part series on large language models (LLMs). Read part one of the series, which looks at how LLMs can simplify chatbot development and improve user experience, and part two, which looks at using large language models with knowledge bots.
