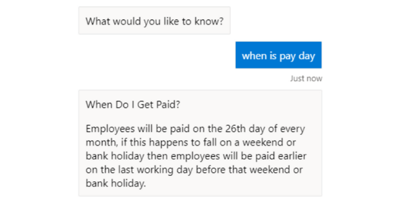

Knowledge bots, also referred to as “FAQ bots” or “QnA bots”, are designed to answer user questions by looking up the most relevant answer in a search engine such as Azure Cognitive Search.

Whilst knowledge bots are extremely useful for reducing the number of questions that a human has to answer repeatedly, they are only as good as the size and accuracy of their knowledge base.

For example, take a large company that has 20 to 30 HR policy documents covering everything from benefits and maternity leave to disciplinary action. How many frequently asked questions (FAQs) would you need to create? Possibly hundreds, or even thousands.

It’s not just the sheer number of FAQs that have to be created; it’s also the effort of maintaining them. For example, an FAQ may use the word “timelines” while a user asks about “timescales”, so you have to handle all of these synonyms.
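To make the maintenance problem concrete, here is a toy keyword-matching FAQ lookup. It is a hypothetical sketch, not how Azure Cognitive Search works internally: without a hand-maintained synonym table, a question about “timescales” misses the FAQ keyed on “timelines”. The FAQ entry, the `SYNONYMS` table and the `lookup` function are all made up for illustration.

```python
from typing import Optional

# Hypothetical FAQ store keyed by a single keyword.
FAQS = {
    "timelines": "<answer about grievance timelines>",
}

# Hypothetical synonym table: every alternative wording must be added by hand
# and kept up to date as users find new ways to phrase the same question.
SYNONYMS = {
    "timescales": "timelines",
    "deadlines": "timelines",
}


def lookup(question: str, use_synonyms: bool) -> Optional[str]:
    """Return the first FAQ answer whose keyword appears in the question."""
    words = question.lower().replace("?", " ").split()
    for word in words:
        if use_synonyms:
            # Map an alternative wording onto the keyword the FAQ uses.
            word = SYNONYMS.get(word, word)
        if word in FAQS:
            return FAQS[word]
    return None


question = "What are the timescales for a grievance?"
print(lookup(question, use_synonyms=False))  # None: "timescales" is not a keyword
print(lookup(question, use_synonyms=True))   # found via the synonym table
```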

This is where LLMs can save the day. There are various LLMs available, including ChatGPT, Bard and Cohere.

Instead of creating hundreds of FAQs, you can use an LLM to answer the question directly from the policy document!

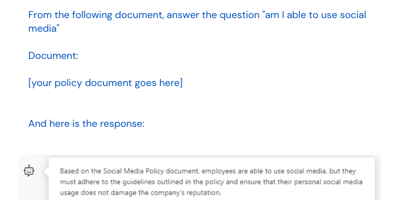

The technique is quite simple and relies on “prompt engineering”: in your prompt to the LLM, you supply both the user’s question and the full text of the policy document.
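A minimal sketch of such a prompt in Python, assuming the role/content chat-message format used by chat-completion APIs such as Azure OpenAI. The function name `build_chat_request`, the system-prompt wording and the placeholder policy text are hypothetical; telling the model to answer only from the supplied document is one common way to keep its answer grounded in that text.

```python
from typing import Dict, List


def build_chat_request(question: str, policy_text: str) -> Dict[str, object]:
    """Build a chat-completion style request grounded in one policy document."""
    system_prompt = (
        "You are an HR assistant. Answer the user's question using ONLY the policy "
        "document below. If the answer is not in the document, say that you don't know.\n\n"
        "POLICY DOCUMENT:\n" + policy_text
    )
    messages: List[Dict[str, str]] = [
        {"role": "system", "content": system_prompt},  # instructions + full policy text
        {"role": "user", "content": question},          # the user's question, unchanged
    ]
    # Temperature 0 keeps the output as consistent as possible between calls.
    return {"messages": messages, "temperature": 0}


request = build_chat_request(
    "Am I able to use social media?",
    "<full text of the Social Media policy>",
)
```

With the OpenAI Python SDK (v1), for example, this dictionary could be passed as `client.chat.completions.create(model=..., **request)`; with the Azure OpenAI client, `model` is the name of your deployment.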

Here is an example showing how an LLM can answer the question “am I able to use social media” using a company’s Social Media policy document as the source:


The user’s question has been answered correctly from the policy document: no FAQ required!

The bot could also provide a link to the source policy document for further information, should the user wish to read it.
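One way to do this is to keep each policy’s title and published location alongside its text, and append a link to every answer. The sketch below assumes a hypothetical `PolicyDocument` record and `format_reply` helper; the intranet URL is a placeholder, not a real address.

```python
from dataclasses import dataclass


@dataclass
class PolicyDocument:
    title: str
    url: str   # hypothetical link to where the policy is published
    text: str  # full text that is sent to the LLM in the prompt


def format_reply(answer: str, source: PolicyDocument) -> str:
    """Append a link to the source policy so the user can read it in full."""
    return f"{answer}\n\nSource: {source.title} ({source.url})"


policy = PolicyDocument(
    title="Social Media Policy",
    url="https://intranet.example.com/policies/social-media",
    text="<full text of the Social Media policy>",
)
print(format_reply("<answer from the LLM>", policy))
```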

There are naturally some important aspects to consider:

- Security and privacy: The user’s questions and the company’s documents are likely to contain confidential information, so make sure your use of an LLM is private and secure. For example, the Azure OpenAI Service (a paid service) offers better data privacy than a free, public LLM service.

- Hallucination: LLMs can suffer from hallucination, where their responses are factually incorrect or entirely made up. The prompt engineering technique shown above reduces this risk because the LLM is instructed to base its answer only on the text you supply in the prompt, although it cannot rule it out completely.

- Temperature and randomness: LLMs often expose parameters, such as temperature, that control how random (or “creative”) their output is. For a knowledge bot, it is advisable to set these to their minimum (e.g. a temperature of zero) so that the LLM gives consistent answers and does not attempt to be creative in its response.

- Size of document: LLMs limit the number of “tokens” that a prompt and its answer can use combined. For example, GPT-3.5 (as used by ChatGPT) has a limit of around 4,000 tokens. If your documents exceed this, you either need to shorten them or use an alternative LLM with a larger limit (e.g. GPT-4 is available with 8K or 32K token limits, albeit at a much higher cost).

- Cost: LLMs are typically priced by the number of tokens used in each prompt and answer. Since policy documents are often large, every prompt will consume a lot of tokens. That said, asking a question about a 3,000-token policy document should still cost less than 0.5 pence. Even so, it is worth comparing LLMs to find the most affordable pricing for your needs.
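The size and cost checks above can be sketched in a few lines. This assumes the rough rule of thumb of about 4 characters per token for English text (for exact counts, use the model’s own tokenizer, e.g. the `tiktoken` library for OpenAI models), and an illustrative price of 0.002 per 1,000 tokens in whatever currency your provider quotes; both the price and the helper names are assumptions, so check your provider’s current price list.

```python
import math

CHARS_PER_TOKEN = 4  # rough rule of thumb for English text, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a piece of text."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_context(prompt_tokens: int, max_answer_tokens: int, context_limit: int) -> bool:
    """The prompt and the answer share the same token limit."""
    return prompt_tokens + max_answer_tokens <= context_limit


def estimate_cost(total_tokens: int, price_per_1k_tokens: float) -> float:
    """Cost of one request under a flat per-1,000-token price."""
    return total_tokens / 1000 * price_per_1k_tokens


small_policy = estimate_tokens("x" * 12_000)  # ~12,000 characters
large_policy = estimate_tokens("x" * 20_000)  # ~20,000 characters
print(small_policy)                            # 3000
print(fits_context(small_policy, 500, 4096))   # True
print(fits_context(large_policy, 500, 4096))   # False: shorten or use a bigger model
print(estimate_cost(small_policy, 0.002))      # illustrative price per 1K tokens
```

Reserving room for the answer (`max_answer_tokens`) matters because the limit covers the prompt and the answer together, not just the document.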

This is the second article in a three-part series on large language models (LLMs). Read part one of the series, which looks at how LLMs can simplify chatbot development and improve user experience. Stay tuned for our final article, looking at how LLMs can be used to validate a chatbot’s response.
