Azure Data Lake Storage Gen2 (or Azure Blob Storage) provides a variety of options for authorizing users or applications to access and modify blob storage content. The best option for you depends largely on whether the caller has an identity in Azure Active Directory (Azure AD). The options are:

- Two options to grant access to a user (or application) without requiring them to have an identity in Azure AD:

  - Shared Key authorization - the caller effectively gains 'super-user' access, meaning full access to all operations on all resources, including data, setting the owner and changing ACLs.

  - Shared access signature (SAS) authorization - SAS tokens include the allowed permissions as part of the token, and those permissions are applied to all authorization decisions. With a SAS, you have granular control over how a client can access your data, but you need to set an end date on which the access will be revoked (the maximum validity period for the SAS token is 365 days).

- Three options for granting access to a user (or application) that has an identity defined in Azure AD:

  - Role-based access control (Azure RBAC) - applies sets of permissions to an object that represents a user, group, service principal or managed identity defined in Azure AD. A permission set can give levels of access such as read or write access to all of the data in a storage account or all of the data in a container.

  - Attribute-based access control (Azure ABAC) - builds on RBAC by adding conditions to role assignments, for cases where specific conditions need to be met (for example, if User A tries to read a blob where Version ID = XXXX, access is not allowed). A role assignment condition is an additional check that you can optionally add to your role assignment to provide more refined access control. You cannot explicitly deny access to specific resources using conditions.

  - Access control lists (ACLs) - give the ability to apply a fine-grained level of access to directories and files.

Exploring common issues

In this article, we'll focus on a common issue faced by many companies involving fine-grained access.

These companies have an Azure tenant and use Azure AD as their enterprise identity service. They have Azure Data Lake Storage Gen2 and want their employees and other Azure resources to use it as the main storage location.

With that in mind, the question is:

"How do you set up Azure Data Lake Storage Gen2 authorization with fine-grained access?"

From the description above, the answer is to use role-based access control (RBAC) and access control lists (ACLs). (ABAC should be used when very specific conditions need to be met, but for the purposes of this exercise RBAC is enough.)

Setting aside the Owner and Contributor RBAC roles, which have full access and should only be given to administrators or similar roles, the question is now: to apply the granular access required, what should be used and where?

Microsoft provides useful documentation on RBAC and ACLs, but the boundaries between the two are not clear.

Control or data plane

There are two main aspects to consider regarding access to Azure resources, such as Data Lake Storage Gen2:

- Control plane – this determines whether a user/app can access a resource and with what level of permissions (e.g. RBAC). Authentication can be credential-based or identity-based. This provides high-level access to resources.

- Data plane – this provides fine-grained control over access to the data within storage resources, for example through Access Control Lists (ACLs).

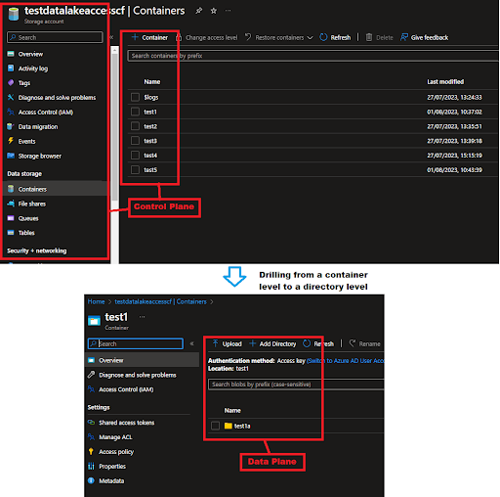

If we apply this generic concept to Azure Data Lake Storage Gen2, using the Azure Portal we can describe each plane as:

The control plane consists of operations related to the storage account itself, such as listing the storage accounts in a subscription, or retrieving or regenerating the storage account keys. Examples of the RBAC roles required: Reader, Contributor, Owner, … (for the built-in roles available for Azure resources, see: https://docs.microsoft.com/en-us/azure/role-based-access-control/built-in-roles)

The data plane refers to reading, writing or deleting the data inside the containers. This is supported by specific RBAC roles, such as Storage Blob Data Owner, Storage Blob Data Contributor or Storage Blob Data Reader, and also by ACLs (for more details, see: https://docs.microsoft.com/en-us/azure/role-based-access-control/role-definitions#management-and-data-operations)
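The split between the two planes shows up in how role definitions are written: control plane operations are listed under `Actions` and data plane operations under `DataActions`. The sketch below illustrates the idea with abbreviated excerpts of two built-in roles. The dictionary layout and the `planes_covered` helper are assumptions made for illustration, and the action lists should be checked against the current built-in roles reference.

```python
# Abbreviated, illustrative excerpts of two built-in role definitions.
# The structure (lower-case keys, plain dict) is an assumption for this sketch.
ROLE_DEFINITIONS = {
    "Reader": {
        "actions": ["*/read"],
        "data_actions": [],
    },
    "Storage Blob Data Reader": {
        "actions": [
            "Microsoft.Storage/storageAccounts/blobServices/containers/read",
            "Microsoft.Storage/storageAccounts/blobServices/generateUserDelegationKey/action",
        ],
        "data_actions": [
            "Microsoft.Storage/storageAccounts/blobServices/containers/blobs/read",
        ],
    },
}


def planes_covered(role_name):
    """Return the planes ("control", "data") a role grants anything on."""
    role = ROLE_DEFINITIONS[role_name]
    planes = set()
    if role["actions"]:
        planes.add("control")
    if role["data_actions"]:
        planes.add("data")
    return planes


for name in ROLE_DEFINITIONS:
    print(name, "->", sorted(planes_covered(name)))
```

Under these excerpts, Reader has no data actions at all, which is why it can list resources but cannot read blob content.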

| | Control Plane | Data Plane |
|---|---|---|
| Authorization Method | RBAC roles such as Reader | RBAC roles such as Storage Blob Data Owner, Storage Blob Data Contributor, Storage Blob Data Reader, or ACLs |

RBAC roles will work on the Control Plane and Data Plane (depending on the role selected) while ACLs will only work on the Data Plane.

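When RBAC and ACLs are both in place, RBAC takes precedence: role assignments are evaluated first, and if they already authorize the operation, the ACLs are not evaluated at all.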
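As a rough mental model of that precedence, the following sketch evaluates RBAC grants first and only falls back to ACLs when RBAC does not authorize the operation. The `Principal` class, its fields and the action names are hypothetical simplifications, not part of any Azure SDK.

```python
from dataclasses import dataclass, field


@dataclass
class Principal:
    """Hypothetical stand-in for an Azure AD identity."""
    name: str
    # Data plane actions granted through RBAC role assignments.
    rbac_data_actions: set = field(default_factory=set)
    # Actions granted through ACL entries on the target path.
    acl_actions: set = field(default_factory=set)


def is_authorized(principal, action):
    """Return (allowed, decided_by) for a data plane action."""
    # Step 1: RBAC role assignments are checked first.
    if action in principal.rbac_data_actions:
        return True, "rbac"
    # Step 2: ACLs are consulted only when RBAC did not grant the action.
    if action in principal.acl_actions:
        return True, "acl"
    return False, "none"


alice = Principal("alice", rbac_data_actions={"read"})
bob = Principal("bob", acl_actions={"read", "write"})
print(is_authorized(alice, "read"))
print(is_authorized(bob, "write"))
```

One consequence of this order is that an ACL cannot take away access that a role assignment has already granted.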

Looking at example scenarios

Let’s try to understand it better by running through some scenarios/examples:

Scenario 1

Authorization requirements:

Accessing the Data Lake Storage Gen2 through the portal and viewing the existing containers, without access to the data inside the containers.

Setup needed:

In this case, an RBAC role such as Reader is enough. The user/group only needs access to the control plane, so no ACLs are required.

Scenario 2

Authorization requirements:

Accessing the Data Lake Storage Gen2 through the portal and being able to view the data inside a container.

Setup needed:

Because the requirement states that access is through the Azure Portal, an RBAC role is always needed. And because access to the container data (files/directories) is also required, the role from the previous scenario is not enough. It needs to be a data plane role such as:

- Storage Blob Data Reader (can access and read the data in all the containers)

- Storage Blob Data Contributor (has full control over the containers and their data but can't configure access and permissions for users/groups)

- Storage Blob Data Owner (has full control over the containers and their data and can define access and permissions for users/groups)

Scenario 3

Authorization requirements:

Accessing the Data Lake Storage Gen2 through an Azure Data Factory resource and being able to access the data inside all its containers.

Setup needed:

Because access to the container data is required, control plane RBAC roles such as Reader won't work, which leaves only data plane RBAC roles or ACLs. Which one to choose? Since the requirement states "able to access the data inside all its containers", either will work, although data plane RBAC roles are easier to configure. Why does either work? Because the requirement is access to all containers, and that is exactly what data plane RBAC roles provide. If access should be restricted to only part of the data, such as a specific directory, then only ACLs will work (next scenario).

Scenario 4

Authorization requirements:

Accessing the Data Lake Storage Gen2 through an Azure Data Factory resource and being able to view the data inside a specific directory in a container.

Setup needed:

Once again, the requirement involves the data plane, so only data plane RBAC roles or ACLs will work. Data plane RBAC roles apply to all of the data in a storage account or in a container; they cannot be restricted to a specific directory. In this scenario, therefore, ACLs are what need to be configured.
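When access is granted through ACLs alone, permissions on the target are not enough: the caller also needs execute (`x`) permission on every parent directory, starting at the container root, in order to traverse down to it. The sketch below lists the minimum ACL permissions along a path for a few operations. The function name, the operation names and the example path are assumptions for illustration; `/` stands for the container root.

```python
from pathlib import PurePosixPath

# Minimum permission needed on the target itself, per operation.
TARGET_PERMISSIONS = {
    "read_file": "r--",
    "write_file": "-w-",
    "list_dir": "r-x",
}


def required_acl_permissions(path, operation):
    """Map each level of an absolute path to the ACL permission needed on it."""
    target = PurePosixPath(path)
    if not target.is_absolute():
        raise ValueError("path must start at the container root '/'")
    required = {}
    # Every ancestor directory, root first, needs execute for traversal.
    for parent in list(target.parents)[::-1]:
        required[str(parent)] = "--x"
    required[str(target)] = TARGET_PERMISSIONS[operation]
    return required


for level, perms in required_acl_permissions("/sales/2024/report.csv", "read_file").items():
    print(f"{perms}  {level}")
```

This is why an ACL on a single directory often has to be accompanied by execute-only entries on the levels above it.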

Scenario 5

Authorization requirements:

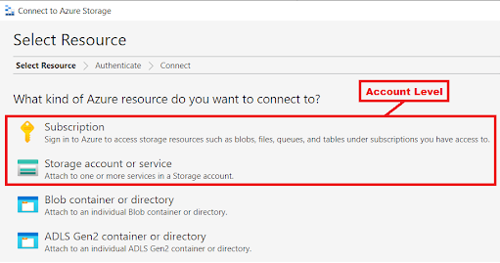

Accessing the Data Lake Storage Gen2 through Microsoft Azure Storage Explorer at an account level (subscription, storage account or service). The requirement is only to list the containers; the user doesn't need to access their data.

Setup needed:

In this case, the requirement is to access the account without accessing the container data. This is control plane level access, so a control plane RBAC role is needed.

Scenario 6

Authorization requirements:

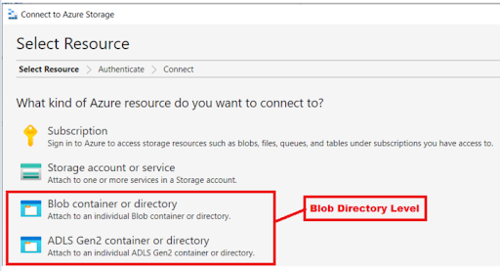

Accessing the Data Lake Storage Gen2 through Microsoft Azure Storage Explorer at a specific Blob directory level and make sure the user can read and write.

Setup needed:

In this case, the requirement is to access the data in a specific blob directory using Microsoft Azure Storage Explorer. This is data plane access only, with the caveat that the user needs to be able to read and write. Only ACLs are needed.

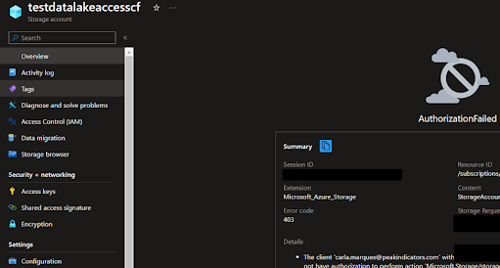

When only ACLs are defined, the user won't be able to access the account through the Azure portal. They will get an authorization failed error:

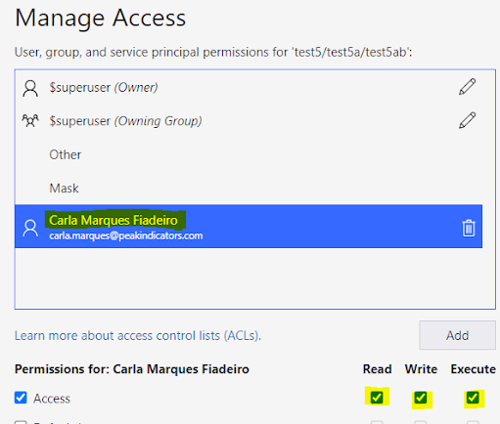

The ACLs should be set up as something similar to:

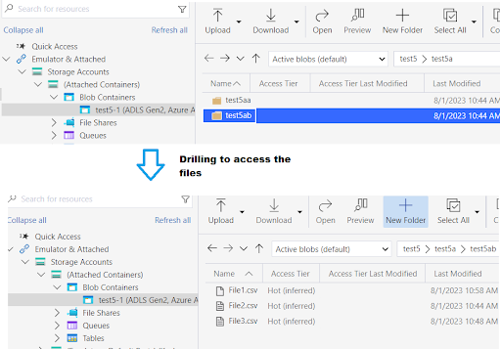
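In text form, ACL entries like these are commonly written as a comma-separated short-form string such as `user::rwx,group::r-x,other::---`, with named entries carrying the Azure AD object ID and default entries prefixed by `default:`. The sketch below parses such a string into structured entries. The ACL value and the object ID are hypothetical placeholders (they are not read from the screenshot), and mask evaluation is not modelled.

```python
import re

# Three characters: read, write, execute, each either set or "-".
PERMISSION_PATTERN = re.compile(r"^[r-][w-][x-]$")


def parse_acl(acl):
    """Parse a short-form ACL string into a list of entry dictionaries."""
    entries = []
    for raw in acl.split(","):
        fields = raw.strip().split(":")
        # Default entries (inherited by new children) carry an extra prefix.
        is_default = fields[0] == "default"
        if is_default:
            fields = fields[1:]
        if len(fields) != 3:
            raise ValueError(f"malformed ACL entry: {raw!r}")
        entry_type, object_id, permissions = fields
        if not PERMISSION_PATTERN.match(permissions):
            raise ValueError(f"malformed permissions in entry: {raw!r}")
        entries.append({
            "default": is_default,
            "type": entry_type,
            "id": object_id,  # empty string means the owning user/group
            "permissions": permissions,
        })
    return entries


# Hypothetical object ID used purely as a placeholder.
USER_ID = "11111111-2222-3333-4444-555555555555"
example = f"user::rwx,user:{USER_ID}:rwx,group::r-x,other::---,default:user:{USER_ID}:rwx"
for entry in parse_acl(example):
    print(entry)
```

For a read-and-write requirement on a directory, as in this scenario, the named user entry on that directory would typically carry `rwx`, with execute-only entries on the levels above it.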

And this will allow the user to access the data:

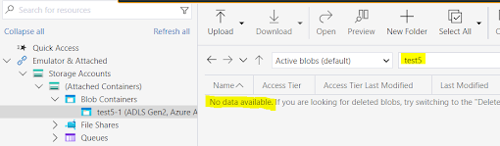

If the user tries to go up all the levels and list the containers, they won't be able to, since this is the control plane realm, which the ACLs don't give access to.

As a final note, from the scenarios above we can recap:

- If access to the container data needs to go through the Azure portal, then both control plane and data plane access are needed (RBAC + ACLs).

- If access to the container data is done by an external tool, then data plane access is required, and data plane RBAC roles or ACLs should be put in place.

- If the access required is only to storage account operations, such as listing the storage accounts in a subscription or retrieving storage account keys, then control plane access is mandatory regardless of how the user connects (through the Azure portal, Microsoft Azure Storage Explorer, etc.).
